Yep. I'm glad you asked *smile*

Instead of starting from scratch, I figured the right approach would be to capitalize on work done by others. Taking that approach, I found an article at http://knol.google.com/k/creating-custom-controls-with-c-net# which made my job easy. The article spells out how to create a custom progress bar for your application. Seems like something useful, huh?

Getting Started

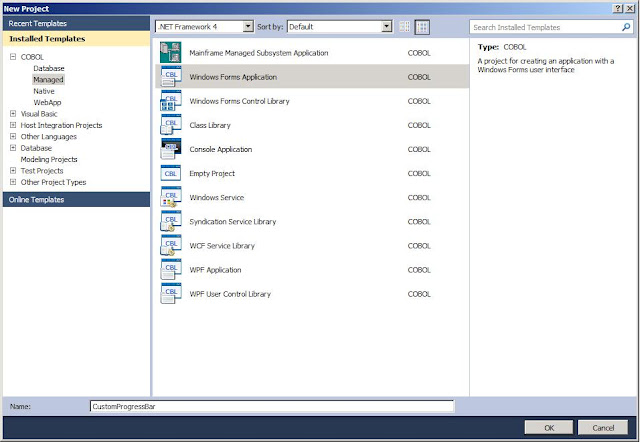

First I created the same project using the same name, but instead of C#, I chose a managed COBOL Windows Forms Application (didn't want to strain too much by thinking too hard too soon, of course).

I then added the reference to the new project as directed and was ready to create the control. As with the C# example, Visual COBOL created a shell class program for me to use as a base. And as in the example, I deleted it because I wouldn’t be using it.

I then set the following properties on the control:

- BackColor: Window

- BorderStyle: FixedSingle

- Size: 148, 14

- Double-Buffered: True
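These values are normally set in the designer's Properties window. For anyone who prefers to see them as code, here is a rough C# sketch (C# because that is what the original article uses) of a constructor that applies the same four settings. The class below is a hypothetical stand-in for the designer-generated control, not the code from Dave's article or from my COBOL project.

```csharp
using System.Drawing;
using System.Windows.Forms;

// Hypothetical stand-in for the designer-generated control class.
public class ProgressControl : UserControl
{
    public ProgressControl()
    {
        // BackColor: Window
        BackColor = SystemColors.Window;

        // BorderStyle: FixedSingle (fully qualified to avoid confusion
        // with the property of the same name)
        BorderStyle = System.Windows.Forms.BorderStyle.FixedSingle;

        // Size: 148, 14
        Size = new System.Drawing.Size(148, 14);

        // Double-Buffered: True (paints off-screen first to reduce flicker)
        DoubleBuffered = true;
    }
}
```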

Even though I used COBOL, you’ll notice the code-behind page that was created for the ProgressControl is very similar to the C# version.

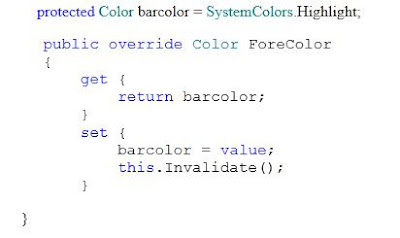

Next, I added the code that lets you choose a foreground color for the progress bar. I also added the “System.Drawing” namespace to the program so that I didn’t have to fully qualify things like “System::Drawing::Color” in my COBOL code. I just wanted to type “Color”, same as Dave did in the C# routine. To do this, I typed the appropriate $set statement at the top of the program. While I was there, I also added a line for “System.Windows.Forms” because we’ll need it later.

You’ll also see I added the variable definition to the working storage section for barcolor. In the original C# example, Dave typed:

The biggest difference is that I had to write a bit more code for the get and set methods than the C# version needed. Not much, but I had to spell out each method, along with its own working storage, procedure division, etc.
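To give a feel for how compact the C# side can be, here is a minimal sketch of a color property with a get and a set accessor. This is my own illustration, not Dave's listing: the backing field name `barcolor` is borrowed from my COBOL working storage, and the default color and the `Invalidate()` call are assumptions.

```csharp
using System.Drawing;
using System.Windows.Forms;

// Hypothetical sketch; not the code from the original article.
public class ProgressControl : UserControl
{
    // Backing field, named after the COBOL working-storage item.
    // The default color is an assumption.
    private Color barcolor = Color.Blue;

    // Both accessors live in one property block in C#,
    // where COBOL needs a separate method for each.
    public override Color ForeColor
    {
        get { return barcolor; }
        set
        {
            barcolor = value;
            Invalidate(); // assumption: ask the control to repaint with the new color
        }
    }
}
```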

Once that portion was coded, I had to make a slight change to the code to create the Value property, because Value is a reserved word in COBOL. So, instead of using “Value” as a variable name, I used pvalue. I placed the working storage definition directly after the definition for barcolor (see above).

I used COMP-1 because this is the COBOL equivalent of a single-precision float (C#’s float). And as with the ForeColor get and set methods above, I wrote the two methods to do what the C# code does:
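For comparison, a C# property of this kind might look roughly like the sketch below. It is an illustration only, not the article's code: the field name `pvalue` is taken from my COBOL version, and the repaint call is an assumption.

```csharp
using System.Windows.Forms;

// Hypothetical sketch; not the code from the original article.
public class ProgressControl : UserControl
{
    // C# float is single precision, which is what COMP-1 maps to.
    private float pvalue;

    // "Value" is a legal property name in C#, so no renaming is needed there.
    public float Value
    {
        get { return pvalue; }
        set
        {
            pvalue = value; // lowercase "value" is C#'s implicit setter argument
            Invalidate();   // assumption: request a repaint so the bar updates
        }
    }
}
```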

Two items may stand out here. The first is the invoke statement “invoke super::OnPaint(e)”. Simply put, C# uses the keyword “base”, while COBOL uses “super” to refer to the parent (base) class’s version of the method. Dave’s article points out that this particular statement is optional in his simple example but recommended for more complex paint routines. If I had used “self” instead of “super”, the code would be referring to the current version of the method (the code shown above). That would cause a recursive loop where every time the method was called, the first thing it would do is call itself. That situation ultimately exhausts the memory available for nested calls (guess how I know *smile*), causing Visual Studio to abend.
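Here is how the same idea looks on the C# side, as a minimal sketch rather than Dave's actual routine. The `pvalue` field, its starting value, and the drawing logic are assumptions made up for the illustration; the point is the `base.OnPaint(e)` line and the comment beneath it.

```csharp
using System.Drawing;
using System.Windows.Forms;

// Hypothetical sketch; not the code from the original article.
public class ProgressControl : UserControl
{
    // Assumed to be a percentage from 0 to 100.
    private float pvalue = 50f;

    protected override void OnPaint(PaintEventArgs e)
    {
        // "base" is C#'s counterpart to COBOL's "super":
        // it runs UserControl's own OnPaint once and returns.
        base.OnPaint(e);

        // Writing this.OnPaint(e) here instead (COBOL's "self") would
        // re-enter this same override on every call and never return.

        // Fill the left part of the control in proportion to pvalue.
        using (var brush = new SolidBrush(ForeColor))
        {
            float width = ClientSize.Width * pvalue / 100f;
            e.Graphics.FillRectangle(brush, 0f, 0f, width, ClientSize.Height);
        }
    }
}
```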

Of course I left out all the mistakes I made trying to decipher the C#, but I never claimed to be a C# programmer now did I? That’s what I call my buddy Mike for. *smile*

Hopefully you find this of interest / value. If you find any errors in my code or suggestions on how to make this better, by all means, please share! All contributions greatly appreciated!